In response to a near-universal backlash to their recent usage of AI, Roblox Corporation posted to their company blog. The entry, titled Tech Talks Episode 28: Update on Our Safety Initiatives, was posted on August 15, 2025.

“I think there’s always more we can be doing, and we will continue to evolve our policies… to respond to new threat vectors and new forms of abuse,” Eliza Jacobs, Senior Director at Roblox, states in the video.

This video was allegedly created by AI. Roblox users watching it have noted the unnatural line deliveries and “too-smooth” movements. Roblox has often been criticized for its blasé handling of child predator issues on the site, and users cite the usage of AI in an apology video as yet another example of the company seemingly “not caring” about its own deep-rooted issues. Roblox as a platform has a long history of being a haven for child predators across the globe to find “easy” targets. There is a slew of cases where pedophiles have met up with small children on the popular site. For example, a lawsuit filed against the company on August 22 alleges that a 13-year-old girl was targeted by a predator in-game.

There have been attempts to rectify this widespread issue. The most recent addition is Roblox Sentinel, an AI-powered system that monitors both users under 13 and users who haven’t verified their ages. The age verification involves taking a selfie that is run through Roblox’s AI. Other sites, such as YouTube, have implemented a similar system to more or less the same backlash.

Parents of Roblox-playing kids are usually not privy to what happens on the platform. One such parent is Kathy Salerno, mother of senior Riley Salerno, an incredibly avid Roblox player.

“Yes, well, I don’t think it’s reliable… I would think that with AI, something could slip through the cracks,” Kathy said.

Roblox is no stranger to the usage of generative AI, having talked about implementing it in September of 2023. This program, called Roblox Cube, could create entire 3D models with nothing but a prompt, but it’s still in active development. The company posted to its blog on July 9, saying, “To proactively moderate the content published on Roblox, we have been building scalable systems leveraging AI for approximately five years.”

For reference, the AI boom began in late 2022 with the release of ChatGPT.

The Roblox community has been pushing back against these implementations as they come. For example, user u/WaterBottle001 on Reddit said that “AI is a useful tool—if used properly… But Roblox relies far too much on AI for their decision making when it comes to moderation.”

While most are nothing but critical of Roblox’s usage of AI, some are sympathetic to why the company added it. The aforementioned pedophile problem cannot be rectified easily, especially taking into consideration the sheer number of people who play Roblox daily: approximately 111.8 million users.

At the same time, there have been movements by the user base itself to try to help drive predators off the site. The most recent incarnation of this phenomenon is the “Roblox Vigilantes,” users who take a To Catch a Predator approach.

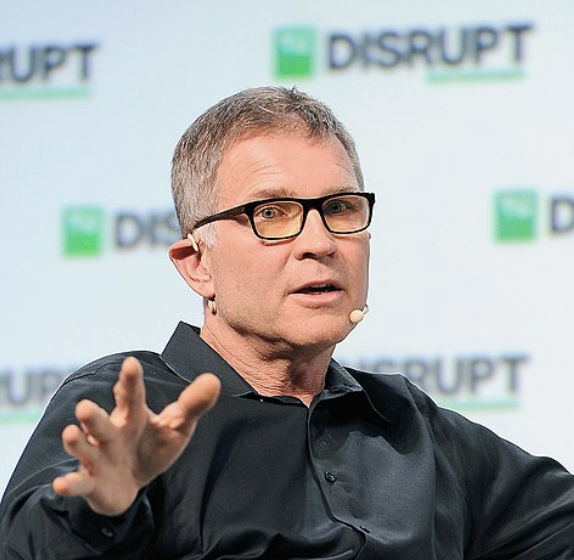

This movement is spearheaded by a YouTuber named Schlep. He poses as a child online in order to prompt predators to meet up in real life, then gets the police involved. His actions inspired groups of “vigilante streamers,” content creators on Twitch and YouTube who livestream their efforts. These groups were then IP banned from Roblox.

In a blog entry titled More on our Removal of Vigilantes from Roblox, the company elaborates on why it decided to ban the vigilante streamers.

“While seemingly well-intentioned, the vigilantes we’ve banned have taken actions that are both unacceptable and create an unsafe environment for users. Similar to actual predators, they often impersonated minors, actively approached other users, then tried to lead them to other platforms to have sexually explicit conversations (which is against our Terms of Use).”

Users like Riley Salerno remain critical. “[The usage of AI rather than real moderators] is just a money-making tool,” Salerno said. “Roblox used to be such a caring website…but even with the money that they have, they won’t fix this problem.”

Salerno is not alone. Many Roblox users view the addition of generative AI moderation as the “easy route,” a money-saving option that doesn’t take the actual users into account.

For example, John Shedletsky, the former creative director of Roblox, was banned from his own site. In a tweet, he said, “I got banned on Roblox for content I created 15 years ago. Feels bad man.” The offending content was a link to Twitch.tv.

In summary, the recent Louisiana lawsuit against the company says it all.

“Defendant repeatedly assures parents, children, and the public at large that Roblox is safe for children,” according to the lawsuit. “However, contrary to its assurances, Defendant fails to implement basic safety controls to protect child users and fails to provide notice of the dangers of Roblox.”
